Docker default bridge

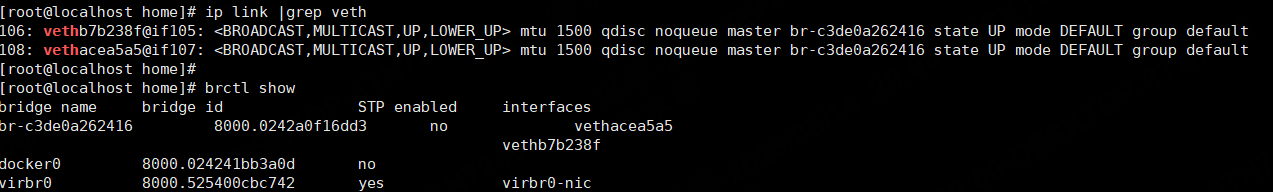

Current path of a ping from container C1: eth0 inside C1 -> vethacea5a5 on the host -> the Linux bridge on the host -> vethb7b238f attached to the bridge -> eth0 inside container C2.

    docker exec xxxx ping 192.168.222.2 -c2 -W1
    PING 192.168.222.2 (192.168.222.2) 56(84) bytes of data.
    64 bytes from 192.168.222.2: icmp_seq=1 ttl=64 time=0.613 ms
    64 bytes from 192.168.222.2: icmp_seq=2 ttl=64 time=0.110 ms

The ping succeeds: the two containers can reach each other.

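Current netfilter configuration:

    # cat /proc/sys/net/bridge/bridge-nf-call-iptables
    1

ip_forward is enabled as well.

Once iptables is told to take part in the Linux bridge's packet handling, every packet crossing the bridge is inspected by iptables; at the same time, ip_forward = 1 is set.

With this configuration, the containers can reach each other.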

If ip_forward is turned off, ping still succeeds:

    sysctl -w net.ipv4.ip_forward=0
    net.ipv4.ip_forward = 0
    docker exec xxxx ping 192.168.222.2 -c2 -W1
    PING 192.168.222.2 (192.168.222.2) 56(84) bytes of data.
    64 bytes from 192.168.222.2: icmp_seq=1 ttl=64 time=0.060 ms
    64 bytes from 192.168.222.2: icmp_seq=2 ttl=64 time=0.108 ms

    --- 192.168.222.2 ping statistics ---
    2 packets transmitted, 2 received, 0% packet loss, time 1000ms

If bridge-nf-call-iptables is then also set to 0, ping still succeeds:

    echo 0 > /proc/sys/net/bridge/bridge-nf-call-iptables
    [root@localhost ~]# cat /proc/sys/net/bridge/bridge-nf-call-iptables
    0
    docker exec xxxx ping 192.168.222.2 -c2 -W1
    PING 192.168.222.2 (192.168.222.2) 56(84) bytes of data.
    64 bytes from 192.168.222.2: icmp_seq=1 ttl=64 time=0.048 ms
    64 bytes from 192.168.222.2: icmp_seq=2 ttl=64 time=0.090 ms

    --- 192.168.222.2 ping statistics ---
    2 packets transmitted, 2 received, 0% packet loss, time 999ms

Next, set the FORWARD default to DROP. iptables -P FORWARD DROP sets the chain's default policy: the final verdict applied to a packet that matches no rule in the FORWARD chain.

    iptables -P FORWARD DROP
    [root@localhost ~]# docker exec xxx ping 192.168.222.2 -c2 -W1
    PING 192.168.222.2 (192.168.222.2) 56(84) bytes of data.
    64 bytes from 192.168.222.2: icmp_seq=1 ttl=64 time=0.046 ms
    64 bytes from 192.168.222.2: icmp_seq=2 ttl=64 time=0.088 ms

    --- 192.168.222.2 ping statistics ---
    2 packets transmitted, 2 received, 0% packet loss, time 999ms
    rtt min/avg/max/mdev = 0.046/0.067/0.088/0.021 ms
    [root@localhost ~]# iptables --policy FORWARD DROP
    [root@localhost ~]# docker exec xxx ping 192.168.222.2 -c2 -W1
    PING 192.168.222.2 (192.168.222.2) 56(84) bytes of data.
    64 bytes from 192.168.222.2: icmp_seq=1 ttl=64 time=0.046 ms
    64 bytes from 192.168.222.2: icmp_seq=2 ttl=64 time=0.089 ms

    --- 192.168.222.2 ping statistics ---
    2 packets transmitted, 2 received, 0% packet loss, time 1000ms
    rtt min/avg/max/mdev = 0.046/0.067/0.089/0.023 ms

With iptables filtering for the bridge switched back on (bridge-nf-call-iptables = 1), ping still works normally:

    cat /proc/sys/net/bridge/bridge-nf-call-iptables
    1
    [root@localhost ~]# docker exec xxx ping 192.168.222.2 -c2 -W1
    PING 192.168.222.2 (192.168.222.2) 56(84) bytes of data.
    64 bytes from 192.168.222.2: icmp_seq=1 ttl=64 time=0.064 ms
    64 bytes from 192.168.222.2: icmp_seq=2 ttl=64 time=0.106 ms

    --- 192.168.222.2 ping statistics ---
    2 packets transmitted, 2 received, 0% packet loss, time 999ms
    rtt min/avg/max/mdev = 0.064/0.085/0.106/0.021 ms

So in this environment, turning off ip_forward, setting the FORWARD policy to DROP, and turning off bridge-nf-call-iptables all leave container-to-container ping working!

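In a bridge network, containers exchange traffic through veth pairs and the bridge. In the kernel this is L2 Ethernet forwarding: the frame never goes through IP routing, so ip_forward has no say over it, and it only reaches the iptables FORWARD chain when br_netfilter is told to pass bridged traffic to iptables (bridge-nf-call-iptables = 1).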

Now bridge-nf-call-iptables is on and the FORWARD policy is DROP, yet ping still works. Time to look at the bridge -> ebtables -> iptables code path?

    iptables -I FORWARD 1 -j DROP
    [root@localhost ~]# docker exec xxxx ping 192.168.222.2 -c2 -W1
    PING 192.168.222.2 (192.168.222.2) 56(84) bytes of data.

    --- 192.168.222.2 ping statistics ---
    2 packets transmitted, 0 received, 100% packet loss, time 1000ms

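In other words, the environment already contains other FORWARD rules (presumably the ones Docker installs) that accept this traffic, so the default DROP policy is never reached; only a DROP rule inserted as the first rule blocks it.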
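
A minimal sketch of why the DROP policy alone changed nothing: an iptables chain is walked rule by rule, the first matching rule with a terminal target (ACCEPT or DROP) decides, and the policy set with -P applies only when no rule matched. The model below is a simplification written for this note, and the type and function names are hypothetical; it ignores jumps into user-defined chains (such as the ones Docker creates), counters, and non-terminal targets. On a real host, iptables -L FORWARD -n -v --line-numbers lists the rules in order with packet counters, which shows which rule is accepting the traffic.

```c
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical, heavily simplified model of one iptables chain. */
typedef enum { VERDICT_ACCEPT, VERDICT_DROP } verdict_t;

typedef struct {
    bool matches;      /* does this rule match the packet? */
    verdict_t target;  /* terminal target: -j ACCEPT or -j DROP */
} rule_t;

/* Walk the rules in order: the first matching rule decides.
 * The chain policy (-P) is returned only if no rule matched. */
verdict_t eval_chain(const rule_t *rules, size_t n, verdict_t policy)
{
    for (size_t i = 0; i < n; i++) {
        if (rules[i].matches)
            return rules[i].target;
    }
    return policy;
}
```

With an existing ACCEPT rule in the chain, eval_chain returns VERDICT_ACCEPT even though the policy is VERDICT_DROP; putting a matching DROP rule at index 0 (the equivalent of iptables -I FORWARD 1 -j DROP) flips the result.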

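Now test with bridge-nf-call-iptables = 0: iptables is no longer called for bridged traffic, so the iptables FORWARD chain (including the DROP rule just inserted) is not involved. Result: ping succeeds.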

    [root@localhost ~]# cat /proc/sys/net/bridge/bridge-nf-call-iptables
    1
    [root@localhost ~]# echo "0" > /proc/sys/net/bridge/bridge-nf-call-iptables
    [root@localhost ~]# docker exec xxxx ping 192.168.222.2 -c2 -W1
    PING 192.168.222.2 (192.168.222.2) 56(84) bytes of data.
    64 bytes from 192.168.222.2: icmp_seq=1 ttl=64 time=0.046 ms
    64 bytes from 192.168.222.2: icmp_seq=2 ttl=64 time=0.085 ms

    --- 192.168.222.2 ping statistics ---
    2 packets transmitted, 2 received, 0% packet loss, time 999ms
    rtt min/avg/max/mdev = 0.046/0.065/0.085/0.021 ms

With bridge-nf-call-iptables = 0, iptables is not consulted, and the result is decided by the ebtables FORWARD policy, DROP or ACCEPT:

    [root@ubuntu22 ~]# ebtables -L
    Bridge table: filter

    Bridge chain: INPUT, entries: 1, policy: ACCEPT
    --log-level debug --log-prefix "ebtable/filter-INPUT" -j CONTINUE

    Bridge chain: FORWARD, entries: 1, policy: ACCEPT
    --log-level debug --log-prefix "ebtable/filter-FORWARD" -j CONTINUE

    Bridge chain: OUTPUT, entries: 1, policy: ACCEPT
    --log-level debug --log-prefix "ebtable/filter-OUTPUT" -j CONTINUE
    [root@ubuntu22 ~]#
    [root@ubuntu22 ~]# ebtables -P FORWARD DROP
    [root@ubuntu22 ~]# docker exec xxx ping 192.168.222.2 -c2 -W1
    PING 192.168.222.2 (192.168.222.2) 56(84) bytes of data.

    --- 192.168.222.2 ping statistics ---
    2 packets transmitted, 0 received, 100% packet loss, time 1025ms
    [root@ubuntu22 ~]# ^C
    [root@ubuntu22 ~]# ebtables -P FORWARD ACCEPT
    [root@ubuntu22 ~]# docker exec xx ping 192.168.222.2 -c2 -W1
    PING 192.168.222.2 (192.168.222.2) 56(84) bytes of data.
    64 bytes from 192.168.222.2: icmp_seq=1 ttl=64 time=0.064 ms
    64 bytes from 192.168.222.2: icmp_seq=2 ttl=64 time=0.102 ms

    --- 192.168.222.2 ping statistics ---
    2 packets transmitted, 2 received, 0% packet loss, time 1037ms
    rtt min/avg/max/mdev = 0.064/0.083/0.102/0.019 ms

That is, when br_forward runs, the frame passes through the bridge's own netfilter chains, such as the ebtables FORWARD chain.
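
Putting the experiments together, the outcome for a frame bridged between two containers can be summarized as a small decision function. This is a hedged model written for this note, not kernel code: the struct, enum, and function names are hypothetical, each chain is reduced to its final verdict (rules plus policy), and it assumes no other bridge-level filtering (for example nftables bridge rules) is installed.

```c
#include <stdbool.h>

/* Hypothetical model of the knobs exercised in the experiments above. */
typedef enum { V_ACCEPT, V_DROP } chain_verdict_t;

typedef struct {
    bool ip_forward;                 /* net.ipv4.ip_forward */
    bool bridge_nf_call_iptables;    /* /proc/sys/net/bridge/bridge-nf-call-iptables */
    chain_verdict_t ebtables_fwd;    /* final verdict of the ebtables filter FORWARD chain */
    chain_verdict_t iptables_fwd;    /* final verdict of the iptables FORWARD chain */
} host_cfg_t;

/* Is a frame switched between two ports of the same bridge delivered? */
bool bridged_frame_delivered(const host_cfg_t *cfg)
{
    /* ip_forward governs IPv4 routing only; a frame switched at L2
     * never reaches the routing code, so it is deliberately ignored. */
    (void)cfg->ip_forward;

    /* The ebtables FORWARD chain applies to every bridged frame. */
    if (cfg->ebtables_fwd == V_DROP)
        return false;

    /* The iptables FORWARD chain is consulted only when br_netfilter
     * is told to hand bridged traffic to iptables. */
    if (cfg->bridge_nf_call_iptables && cfg->iptables_fwd == V_DROP)
        return false;

    return true;
}
```

Each run above maps to one configuration: ip_forward never changes the result, the iptables FORWARD verdict matters only when bridge-nf-call-iptables is 1, and an ebtables FORWARD DROP blocks the ping either way.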

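br_handle_frame is the input entry point for frames received on a bridge port. I won't go through the detailed code here; it is ordinary Layer 2 switch logic.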
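
The Layer 2 switch logic referred to above (learn the source MAC, look up the destination MAC, then deliver to the host, forward out one port, or flood) can be sketched with a toy forwarding database. Everything here is a hypothetical simplification for illustration: a fixed-size linear table instead of the kernel's hash table, and no ageing, VLAN, or multicast handling. Only frames addressed to the host reach the IP layer, where routing (and therefore ip_forward) could come into play; a frame from C1 to C2's MAC is simply switched to C2's port.

```c
#include <stdbool.h>
#include <string.h>

#define FDB_SIZE 16
#define PORT_NONE (-1)

/* Hypothetical toy FDB entry: MAC address -> bridge port. */
typedef struct {
    unsigned char mac[6];
    int port;
    bool used;
} fdb_entry_t;

typedef struct {
    fdb_entry_t entries[FDB_SIZE];
    unsigned char self_mac[6];   /* MAC of the bridge device itself */
} toy_bridge_t;

typedef enum { TO_LOCAL_STACK, TO_PORT, FLOOD, DISCARD } fwd_action_t;

static fdb_entry_t *fdb_find(toy_bridge_t *br, const unsigned char *mac)
{
    for (int i = 0; i < FDB_SIZE; i++)
        if (br->entries[i].used && memcmp(br->entries[i].mac, mac, 6) == 0)
            return &br->entries[i];
    return NULL;
}

/* Learning: remember which port the source MAC was last seen on. */
static void fdb_learn(toy_bridge_t *br, const unsigned char *src, int in_port)
{
    fdb_entry_t *e = fdb_find(br, src);
    if (e) {
        e->port = in_port;
        return;
    }
    for (int i = 0; i < FDB_SIZE; i++) {
        if (!br->entries[i].used) {
            memcpy(br->entries[i].mac, src, 6);
            br->entries[i].port = in_port;
            br->entries[i].used = true;
            return;
        }
    }
    /* Table full: this toy simply stops learning. */
}

/* Decide what to do with a frame received on in_port. */
fwd_action_t toy_handle_frame(toy_bridge_t *br, const unsigned char *src,
                              const unsigned char *dst, int in_port,
                              int *out_port)
{
    fdb_learn(br, src, in_port);
    *out_port = PORT_NONE;

    if (memcmp(dst, br->self_mac, 6) == 0)
        return TO_LOCAL_STACK;   /* addressed to the host: hand to the IP layer */

    fdb_entry_t *e = fdb_find(br, dst);
    if (e == NULL)
        return FLOOD;            /* unknown destination: send out all other ports */
    if (e->port == in_port)
        return DISCARD;          /* destination sits on the ingress port */

    *out_port = e->port;
    return TO_PORT;              /* known unicast: forward out one port */
}
```

The first frame from C1 is flooded because C2's MAC is not yet known; once C2 answers, both directions are forwarded to a single port.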

    static int nf_hook_bridge_pre(struct sk_buff *skb, struct sk_buff **pskb)
    {
    #ifdef CONFIG_NETFILTER_FAMILY_BRIDGE
        struct nf_hook_entries *e = NULL;
        struct nf_hook_state state;
        unsigned int verdict, i;
        struct net *net;
        int ret;

        net = dev_net(skb->dev);
    #ifdef HAVE_JUMP_LABEL
        /* Fast path: nothing is registered at bridge PRE_ROUTING */
        if (!static_key_false(&nf_hooks_needed[NFPROTO_BRIDGE][NF_BR_PRE_ROUTING]))
            goto frame_finish;
    #endif

        e = rcu_dereference(net->nf.hooks_bridge[NF_BR_PRE_ROUTING]);
        if (!e)
            goto frame_finish;

        nf_hook_state_init(&state, NF_BR_PRE_ROUTING,
                           NFPROTO_BRIDGE, skb->dev, NULL, NULL,
                           net, br_handle_frame_finish);

        /* Run each registered PRE_ROUTING hook in order */
        for (i = 0; i < e->num_hook_entries; i++) {
            verdict = nf_hook_entry_hookfn(&e->hooks[i], skb, &state);
            switch (verdict & NF_VERDICT_MASK) {
            case NF_ACCEPT:
                /* broute asked for this frame to go up the stack instead of being bridged */
                if (BR_INPUT_SKB_CB(skb)->br_netfilter_broute) {
                    *pskb = skb;
                    return RX_HANDLER_PASS;
                }
                break;
            case NF_DROP:
                kfree_skb(skb);
                return RX_HANDLER_CONSUMED;
            case NF_QUEUE:
                ret = nf_queue(skb, &state, i, verdict);
                if (ret == 1)
                    continue;
                return RX_HANDLER_CONSUMED;
            default: /* STOLEN */
                return RX_HANDLER_CONSUMED;
            }
        }
    frame_finish:
        /* All hooks accepted: continue normal bridge processing */
        net = dev_net(skb->dev);
        br_handle_frame_finish(net, NULL, skb);
    #else
        br_handle_frame_finish(dev_net(skb->dev), NULL, skb);
    #endif
        return RX_HANDLER_CONSUMED;
    }
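
The for loop in nf_hook_bridge_pre is the core pattern: hooks run in order, a DROP from any one of them ends processing, and only when all of them accept does the okfn (br_handle_frame_finish here) run. A hedged user-space sketch of just that pattern follows; the names are hypothetical, an int stands in for the skb, and the NF_QUEUE, STOLEN, and broute cases are left out.

```c
#include <stddef.h>

/* Hypothetical stand-ins for the netfilter verdicts used in the loop. */
typedef enum { TOY_NF_DROP, TOY_NF_ACCEPT } toy_verdict_t;

typedef toy_verdict_t (*toy_hookfn)(int pkt);
typedef void (*toy_okfn)(int pkt);

/* Returns 1 if the packet reached okfn, 0 if some hook dropped it. */
int toy_run_hooks(const toy_hookfn *hooks, size_t n, int pkt, toy_okfn okfn)
{
    for (size_t i = 0; i < n; i++) {
        if (hooks[i](pkt) == TOY_NF_DROP)
            return 0;   /* the kernel frees the skb at this point */
    }
    okfn(pkt);          /* every hook accepted: continue bridge processing */
    return 1;
}

/* Example hooks and okfn for trying the model out. */
static int delivered_count = 0;

static toy_verdict_t hook_accept(int pkt) { (void)pkt; return TOY_NF_ACCEPT; }
static toy_verdict_t hook_drop(int pkt)   { (void)pkt; return TOY_NF_DROP; }
static void deliver(int pkt)              { (void)pkt; delivered_count++; }
```

One dropping hook among several accepting ones is enough to stop the frame, which matches how a single ebtables DROP policy blocked the ping above.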

    static void __br_forward(const struct net_bridge_port *to,
                             struct sk_buff *skb, bool local_orig)
    {
        struct net_bridge_vlan_group *vg;
        struct net_device *indev;
        struct net *net;
        int br_hook;

        vg = nbp_vlan_group_rcu(to);
        skb = br_handle_vlan(to->br, to, vg, skb);
        if (!skb)
            return;

        indev = skb->dev;
        skb->dev = to->dev;
        if (!local_orig) {
            /* Frame arrived on another bridge port: use the FORWARD hook */
            if (skb_warn_if_lro(skb)) {
                kfree_skb(skb);
                return;
            }
            br_hook = NF_BR_FORWARD;
            skb_forward_csum(skb);
            net = dev_net(indev);
        } else {
            /* Frame was sent by the host itself: use the LOCAL_OUT hook */
            if (unlikely(netpoll_tx_running(to->br->dev))) {
                skb_push(skb, ETH_HLEN);
                if (!is_skb_forwardable(skb->dev, skb))
                    kfree_skb(skb);
                else
                    br_netpoll_send_skb(to, skb);
                return;
            }
            br_hook = NF_BR_LOCAL_OUT;
            net = dev_net(skb->dev);
            indev = NULL;
        }

        /* Run the bridge-family hooks, then br_forward_finish on ACCEPT */
        NF_HOOK(NFPROTO_BRIDGE, br_hook, net, NULL, skb, indev, skb->dev,
                br_forward_finish);
    }
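
The part of __br_forward that matters for these experiments is the choice of br_hook. Traffic from C1 to C2 enters the bridge on C1's veth port, so local_orig is false and the frame goes through NF_BR_FORWARD, where the ebtables filter FORWARD chain is attached (and, when bridge-nf-call-iptables is 1, the br_netfilter hook that hands IPv4 packets to the iptables FORWARD chain). A hypothetical sketch of that choice, with made-up names that only mirror the kernel's:

```c
#include <stdbool.h>

/* Hypothetical names mirroring the two hooks __br_forward() picks from. */
typedef enum { TOY_BR_FORWARD, TOY_BR_LOCAL_OUT } toy_br_hook_t;

/* Same decision as the if/else in __br_forward(): frames that came in on
 * another bridge port use FORWARD, frames sent by the host use LOCAL_OUT. */
toy_br_hook_t toy_pick_br_hook(bool local_orig)
{
    return local_orig ? TOY_BR_LOCAL_OUT : TOY_BR_FORWARD;
}

/* Name of the ebtables filter-table chain attached at each of those hooks. */
const char *toy_ebtables_chain(toy_br_hook_t hook)
{
    return hook == TOY_BR_FORWARD ? "FORWARD" : "OUTPUT";
}
```

For the C1 to C2 ping, toy_pick_br_hook(false) yields TOY_BR_FORWARD, consistent with ebtables -P FORWARD DROP being the setting that blocked it.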

浙公网安备 33010602011771号
