Spark Study: Day 6

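Analyze the data:

1. Count each normal word (a word count).

2. Count how many special characters appear in total.

The special-character list is shared with the executors as a broadcast variable, and an accumulator counts each special character as it is filtered out of the word stream.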
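Before running the Spark job, it can help to have a plain-Python reference for both tasks so the RDD output can be checked against it. The sketch below is only an illustration: the sample lines are made up, and the helper name `analyze` is hypothetical. It uses the same special-character list, the same blank-line filter and the same whitespace split as the Spark code.

```python
import re
from collections import Counter

# Same special-character list as the Spark job.
ABNORMAL_CHARS = {",", ".", "!", "#", "$", "%"}


def analyze(lines):
    """Return (word_counts, special_char_count) for an iterable of lines."""
    word_counts = Counter()
    special_count = 0
    for line in lines:
        line = line.strip()
        if not line:  # skip blank lines, like the filter on lines_rdd
            continue
        for token in re.split(r"\s+", line):
            if token in ABNORMAL_CHARS:
                special_count += 1
            else:
                word_counts[token] += 1
    return word_counts, special_count


if __name__ == "__main__":
    # Hypothetical sample input, not the real data file.
    sample = ["hadoop spark # hadoop", "   ", "spark ! , hive"]
    counts, specials = analyze(sample)
    print(dict(counts))  # {'hadoop': 2, 'spark': 2, 'hive': 1}
    print(specials)      # 3
```

Like the Spark version, a punctuation mark is only counted when it is a standalone token: `spark,` is treated as a word, not as a word plus a comma.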

from pyspark import SparkConf, SparkContext

from pyspark.storagelevel import StorageLevel

from defs import context_jieba

from defs import context_jieba, filter_words, append_words, extract_user_and_word

from operator import add

import re

if __name__ == '__main__':

conf = SparkConf().setAppName("test").setMaster("local[*]")

sc = SparkContext(conf=conf)

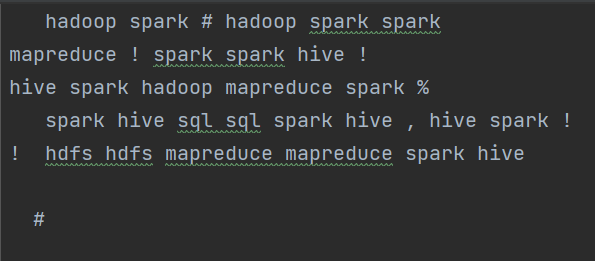

file_rdd = sc.textFile("../../data/input/accumulator_broadcast_data.txt")

abnormal_char = [",", ".", "!", "#", "$", "%"]

broadcast = sc.broadcast(abnormal_char)

acmlt = sc.accumulator(0)

lines_rdd = file_rdd.filter(lambda line: line.strip())

data_rdd = lines_rdd.map(lambda line: line.strip())

#通过空格切分

words_rdd = data_rdd.flatMap(lambda line: re.split("\s+", line))

def filter_func(data):

global acmlt

abnormal_chars = broadcast.value

if data in abnormal_chars:

acmlt += 1

return False

else:

return True

normal_words_rdd = words_rdd.filter(filter_func)

result_rdd = normal_words_rdd.map(lambda x: (x, 1)).\

reduceByKey(lambda a, b: a + b)

print("正常单词计数结果:", result_rdd.collect())

print("特殊字符数量:", acmlt)

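The job uses `sc.broadcast` and `sc.accumulator` rather than plain Python variables because `filter_func` runs inside tasks on executors, each working on its own copy of the closure. A rough mental model, simulated below in plain Python and explicitly not Spark's actual implementation: the broadcast value is read-only and shared by all tasks, each task counts into a local copy that starts at zero, and the driver merges the per-task deltas when the action runs. The class `ToyAccumulator`, the functions `run_task` and `run_action`, and the round-robin partitioning are all hypothetical. The last lines mimic a known caveat: accumulator updates made inside a transformation are applied again if that transformation is re-executed, for example by a second action on an uncached RDD.

```python
class ToyAccumulator:
    """Driver-side total. Tasks never touch it directly; they only report deltas.

    Toy model for illustration only, not pyspark's Accumulator class.
    """

    def __init__(self):
        self.value = 0

    def merge(self, delta):
        self.value += delta


def run_task(partition, abnormal_chars):
    """Simulate one task: filter its partition and count special chars locally."""
    local_count = 0  # every task starts from a fresh, zeroed local copy
    kept = []
    for token in partition:
        if token in abnormal_chars:
            local_count += 1
        else:
            kept.append(token)
    return kept, local_count


def run_action(tokens, abnormal_chars, acc, num_partitions=3):
    """Simulate an action such as collect(): run every task, then merge on the driver."""
    shared = frozenset(abnormal_chars)  # stands in for the read-only broadcast value
    partitions = [tokens[i::num_partitions] for i in range(num_partitions)]
    kept_all = []
    for part in partitions:
        kept, delta = run_task(part, shared)
        kept_all.extend(kept)
        acc.merge(delta)  # only the driver sees the combined total
    return kept_all


if __name__ == "__main__":
    special = [",", ".", "!", "#", "$", "%"]
    tokens = ["hadoop", "#", "spark", "!", "hive", ","]
    acc = ToyAccumulator()
    words = run_action(tokens, special, acc)
    print(sorted(words), acc.value)  # ['hadoop', 'hive', 'spark'] 3
    # A second action on the same uncached data re-runs the tasks,
    # so the accumulator is incremented again.
    run_action(tokens, special, acc)
    print(acc.value)  # 6
```

In the Spark job itself, `normal_words_rdd` feeds a single action, so the count is applied once. If it were reused by another action, persisting it first would keep the filter from running twice.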
浙公网安备 33010602011771号